Over the past year, the term “AI agent” has flooded vendor decks, social posts, and conference stages. Most of these pitches come with a familiar promise: deploy the agent, point it at a problem, and watch the efficiency gains roll in.

But in production, reality looks different. AI agents that lack a deep understanding of the organization’s systems, data, and operating rules tend to drift, producing irrelevant outputs, breaking workflows, or triggering compliance risks.

The common root cause is an absence of context. Without adequate contextual grounding, AI agents can’t deliver reliable, workflow-specific outcomes at scale. And without that, adoption stalls long before the business realizes any return.

Why Context Engineering Matters

The move from AI agent prototypes to production deployments has sparked a clear theme in technical circles: context is the infrastructure that makes agents viable in the enterprise. As Douwe Kiela, CEO & Co-founder of Contextual AI, puts it, “Context engineering has become the critical bottleneck for enterprise AI… The difference between AI experiments and transformative business impact.”

Aaron Levie, CEO of Box, adds, “To get [AI agents] into the middle of an enterprise workflow requires significant scaffolding, domain understanding, and context engineering. This is the play.”

This is the emerging consensus from the people building at the edge: without engineered context, the most advanced models will fail under real-world data complexity, compliance requirements, and workflow integration demands. But how is context defined when it comes to enterprise AI agents?

What “Context” Really Means in Enterprise AI

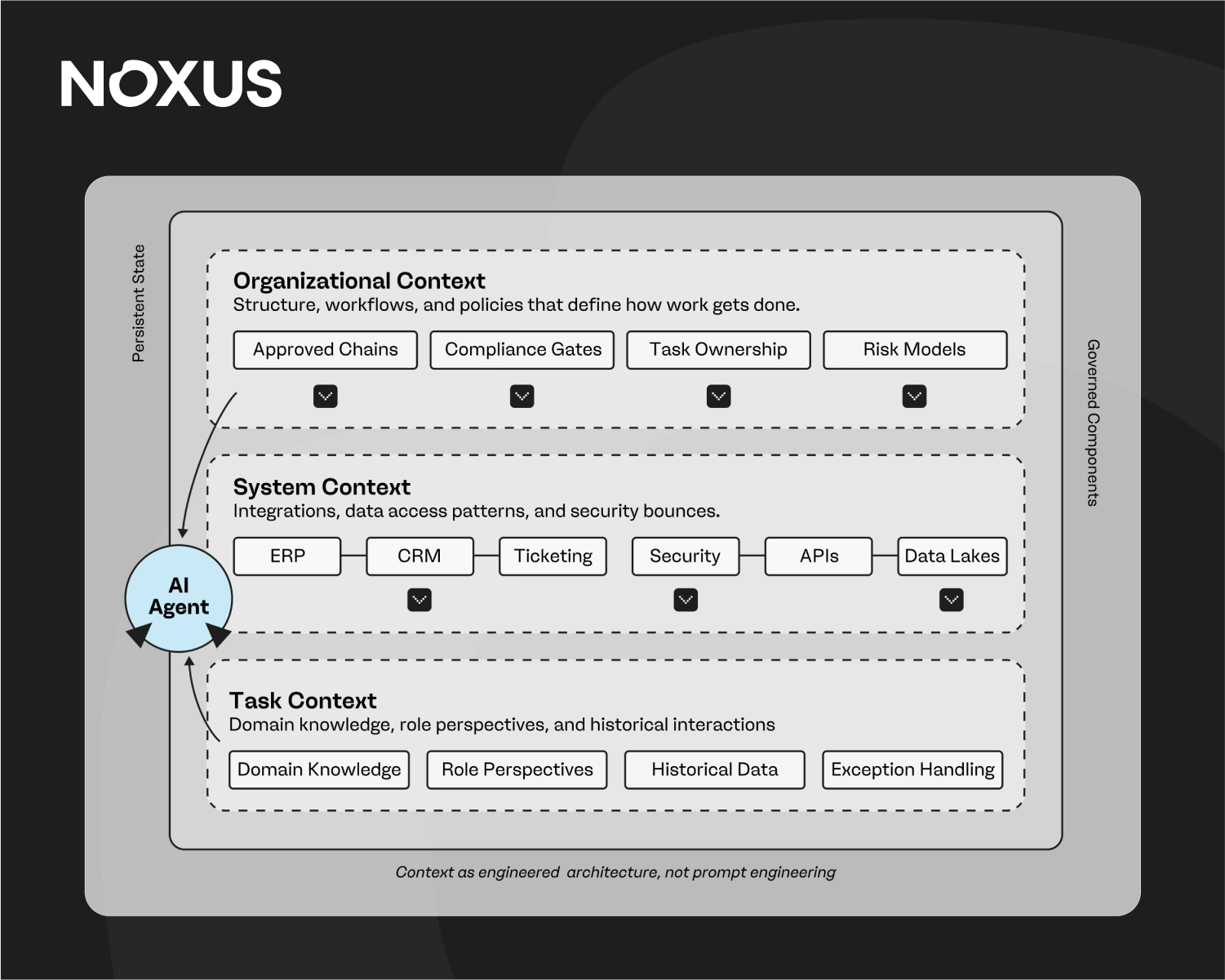

In a consumer setting, “context” often means the last few messages in a chat or a handful of recent interactions. In the enterprise, it’s a much heavier construct; we like to think of it as a layered state across systems, workloads, and compliance boundaries, a bit like this:

For AI agents operating in production, context spans three distinct dimensions:

- Organizational context: The structure, workflows, and policies that define how work gets done. This includes approval chains, compliance gates, and task ownership models that the AI agent must respect to avoid operational risk.

- System context: The integrations, data access patterns, and security boundaries across ERP, CRM, ticketing, and custom platforms. An agent that doesn’t “know” where to fetch truth, or how to push updates back in, becomes a silo rather than a collaborator.

- Task context: The domain-specific knowledge, role-based perspectives, and historical interactions that inform accurate and efficient task execution. Without this, agents repeat errors, miss dependencies, and fail to adapt to exceptions.

In practice, this is far more than a prompt-engineering challenge. Context in the enterprise is a persistent, evolving state that must be engineered into the agent’s architecture, then captured, updated, and governed.
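To make the three dimensions concrete, here is a minimal Python sketch of context as versioned, structured state rather than prompt text. Every class and field name below (`AgentContext`, `approval_chain`, `sources_of_truth`, and so on) is a hypothetical choice for illustration, not a schema from any particular platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class OrganizationalContext:
    approval_chain: list[str]         # roles that must sign off, in order
    compliance_gates: list[str]       # named checks the agent must pass
    task_owner: str                   # team accountable for the task


@dataclass
class SystemContext:
    sources_of_truth: dict[str, str]  # entity -> system to read it from
    writable_systems: set[str]        # systems the agent may push updates to


@dataclass
class TaskContext:
    domain_notes: list[str]           # domain-specific knowledge for the task
    prior_interactions: list[str] = field(default_factory=list)


@dataclass
class AgentContext:
    """Hypothetical container for the three context dimensions."""
    organizational: OrganizationalContext
    system: SystemContext
    task: TaskContext
    version: int = 1
    updated_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def record_interaction(self, note: str) -> None:
        # Append to task history and bump the version so changes are traceable.
        self.task.prior_interactions.append(note)
        self.version += 1
        self.updated_at = datetime.now(timezone.utc)
```

Versioning and timestamping the state on every change is one simple way to support the "captured, updated, and governed" requirement: each agent decision can be tied back to the exact context version it ran against.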

Context as a Core Technical Discipline

For enterprise AI agents, context determines whether automation stays controlled or drifts into risk. Without robust contextual grounding, three failure modes dominate:

- Trust loss: Outputs that contradict policy, ignore current data, or miss workflow dependencies erode confidence. Once operators stop relying on the agent, adoption stalls.

- Compliance breaches: Context-blind agents can process data outside approved regions or skip required approvals. In regulated domains, either one is a direct violation.

- Governance gaps: Lacking engineered context, teams cannot explain or reproduce agent decisions. This blocks auditability and weakens oversight.

When context is deliberately engineered, these risks shrink. Persistent, structured context keeps agents aligned with enterprise rules, preserves decision history, and makes outputs both reliable and defensible. This discipline is often the deciding factor between pilots that stall and systems that scale.

Challenges of Contextual Integration at Scale

Embedding deep organizational context into AI agents is an ongoing process, not a one-time integration. In large enterprises, we see several friction points come up time and again:

#1. Siloed data and fragmented integration layers

Critical context often lives across multiple systems of record: ERP, CRM, ticketing, document repositories, and bespoke internal tools. Without unified access, agents operate with partial visibility, leading to incomplete or inconsistent outputs.

#2. Context drift

As workflows, policies, and data sources evolve, agents that aren’t continuously updated begin to operate on stale assumptions. Over time, this degrades accuracy and increases operational risk.
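One lightweight guard against drift is to give every context source a freshness budget and flag anything that exceeds it. The sketch below assumes each source records when it was last refreshed. `ContextSource`, `max_age`, and `stale_sources` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ContextSource:
    name: str
    last_refreshed: datetime  # timezone-aware timestamp of the last sync
    max_age: timedelta        # how long this source may go without a refresh


def stale_sources(sources, now=None):
    """Return the names of sources whose last refresh is older than max_age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [s.name for s in sources if now - s.last_refreshed > s.max_age]
```

A check like this can run on a schedule or before each agent run. What happens when a source is stale (block the run, warn, or trigger a refresh) is a policy decision the sketch deliberately leaves open.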

#3. Security and compliance boundaries

In multi-agent deployments, ensuring that each agent only accesses the data it is authorized to see, while still having enough context to perform, demands strict RBAC, ACL mapping, and integration with enterprise identity systems.
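As a rough illustration, the filter below applies role-based access before any context reaches the agent. It assumes each context item carries the set of roles allowed to read it. In a real deployment that mapping would come from the enterprise identity system, and `ContextItem` and `authorized_context` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    item_id: str
    text: str
    allowed_roles: frozenset[str]  # roles permitted to read this item


def authorized_context(items, agent_roles):
    """Keep only items that share at least one role with the agent.

    Items with no allowed roles are never returned (deny by default).
    """
    roles = set(agent_roles)
    return [item for item in items if item.allowed_roles & roles]
```

Filtering before retrieval results are assembled, rather than after generation, means unauthorized data never enters the model's input in the first place.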

#4. Performance under load

Richer context models often mean larger retrieval sets, more complex orchestration, and higher computational overhead. Without efficient scaling mechanisms in place, latency and cost can spiral as deployments grow.
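A simple way to keep retrieval sets bounded is to cap them with an explicit token budget. The sketch below greedily keeps the highest-scoring chunks that still fit. It assumes relevance scores are already computed upstream and, as a loud simplification, approximates token count by whitespace-separated words. A real system would use the target model's tokenizer. `select_within_budget` is a hypothetical helper.

```python
def select_within_budget(scored_chunks, token_budget):
    """Greedily pick the most relevant chunks that fit in the budget.

    scored_chunks: iterable of (relevance_score, text) pairs.
    Returns (selected_texts, tokens_used).
    """
    selected, used = [], 0
    # Visit chunks from most to least relevant.
    for _score, text in sorted(scored_chunks, key=lambda c: c[0], reverse=True):
        cost = len(text.split())  # crude token estimate (assumption)
        if used + cost <= token_budget:
            selected.append(text)
            used += cost
    return selected, used
```

Because the budget is a parameter, it can be tuned per workflow: a tight budget for latency-sensitive tasks, a larger one where completeness matters more than cost.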

Solving these challenges requires treating context as an evolving part of the infrastructure: a governed, observable pipeline that adapts with the enterprise.

Proven Patterns for Engineering Context

Successful enterprise AI agents share a common trait: context is designed into their architecture from the outset. The following patterns are best-practice approaches for technical teams looking to achieve that:

- Contextual memory and state management: Maintain persistent, role-specific memory that spans sessions, workflows, and even model updates. This ensures agents retain relevant history without introducing context bloat that slows execution.

- Adaptive data chunking & Retrieval-Augmented Generation (RAG): Use dynamic chunk sizes and relevance scoring to retrieve only the most relevant context while minimizing token usage and latency.

- Dynamic model routing: Select the optimal model or inference path based on the type of context required, for example, routing compliance-sensitive tasks through models with stricter guardrails.

- Failover and human escalation: When context is insufficient or confidence falls below a threshold, route the task to human review, preserving the full context package for rapid decision-making.

- Embedding business logic: Codify operational rules, exception handling, and approval flows directly into the agent’s orchestration layer so it operates within the same constraints as human teams.
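Two of the patterns above, dynamic model routing and human escalation, can be sketched together in a few lines of Python. This is an illustrative outline under stated assumptions, not a reference implementation: the route names (`guarded_model`, `general_model`, `human_review`), the `0.75` threshold, and the `Task` and `Decision` types are all hypothetical.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.75  # assumed value; tune per workflow


@dataclass
class Task:
    description: str
    compliance_sensitive: bool
    confidence: float  # upstream estimate in [0, 1]
    context_package: dict = field(default_factory=dict)


@dataclass
class Decision:
    route: str
    reason: str
    context_package: dict  # passed along intact for the next handler


def route_task(task, threshold=CONFIDENCE_THRESHOLD):
    """Escalate when context or confidence is lacking, otherwise pick a model."""
    if not task.context_package:
        return Decision("human_review", "no context available", task.context_package)
    if task.confidence < threshold:
        return Decision("human_review", "confidence below threshold", task.context_package)
    if task.compliance_sensitive:
        return Decision("guarded_model", "compliance-sensitive task", task.context_package)
    return Decision("general_model", "default path", task.context_package)
```

The escalation checks run first on purpose: a compliance-sensitive task with low confidence should reach a human rather than a stricter model, and the reviewer receives the same context package the agent had.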

How Noxus Embeds Context by Design

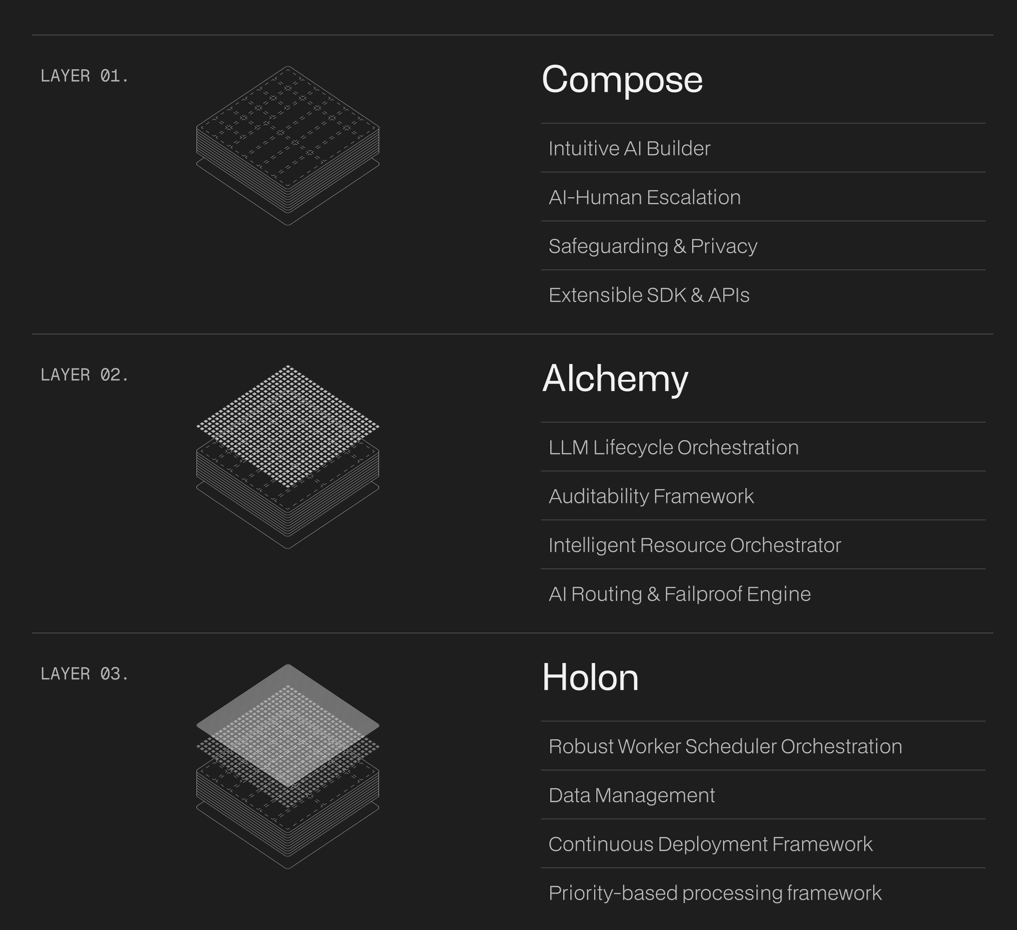

Noxus was engineered with context as a first-class requirement. Our three-layer architecture embeds contextual awareness from workflow design through to execution at scale:

- Compose layer: A no/low-code environment for building and configuring agents that can integrate directly with enterprise systems (SAP, Salesforce, ServiceNow, Azure, Google Workspace). This layer governs access through RBAC and ACL mapping, ensuring each agent inherits the proper organizational and system context for its role.

- Alchemy layer: Manages the orchestration and lifecycle of models with context-rich routing, retrieval, and optimization. Dynamic model routing, failover strategies, and RAG pipelines ensure every decision is made with the most relevant and up-to-date information available.

- Holon layer: Provides the distributed compute and storage backbone to make context persist and scale. Liquid storage tiers keep high-priority context in memory while moving less critical state to hot or cold storage. Infrastructure-as-code deployment supports cloud, hybrid, and on-prem environments without compromising data sovereignty.

This architecture ensures that context is embedded at build time, then kept continuously updated, observable, and auditable across the agent’s lifecycle. All of these are prerequisites for delivering high-trust outcomes in production.

Make AI Agents Enterprise-Ready Through Context

By making organizational, system, and task context a first-class citizen in the architecture, technical leaders can deploy agents that are accurate, compliant, and operationally trusted from day one, and keep them that way as the enterprise evolves.

Noxus was built for exactly this challenge. Our SDK-first platform, orchestration engine, and deployment flexibility make context-rich agent design a repeatable, scalable process, not a custom integration project each time.

If you’re ready to see what context-aware agents look like in a real enterprise environment, book a technical walkthrough with our team.